In the rapidly evolving world of artificial intelligence, the debate between open-source and closed-source approaches remains a defining tension. This clash is not just about philosophy but about performance, accessibility, and the future trajectory of the industry. The consolidation of AI companies and the increasing dominance of a few major players have only added to the complexity, raising important questions about who controls the technology and how it shapes the world.

The recent release of DeepSeek-V3 marks a significant milestone in this evolution, offering performance that rivals industry leaders while maintaining an open-source approach. The model’s combination of strong capabilities and cost-effectiveness challenges the conventional wisdom that cutting-edge AI must come at a premium price or sit behind proprietary walls, and it prompts a closer look at the future of AI development and democratization.

Open-Source vs. Closed-Source AI: A Growing Tension

The AI field is experiencing a tension between open-source and closed-source approaches. This tension is notable in the LLM space, where open-source options promote accessibility, while closed-source models often emphasize performance and security. The choice depends on user needs and priorities.

Open-source models, such as DeepSeek, typically offer public access to their model weights and architecture, and in some cases to their source code and training scripts. This fosters collaboration, transparency, and innovation by enabling researchers and developers to inspect, modify, and build upon the model. Users can verify how systems behave, which enhances trust and accountability. Conversely, closed-source models, such as those from OpenAI and Google, keep their code and weights proprietary, limiting access and customization.

One key aspect of this tension is the community and ecosystem supporting each approach. Open-source models thrive on a broad, collaborative community of developers and users who contribute improvements and share knowledge. In contrast, closed-source models often rely on more restricted ecosystems but may offer certified partners and services.

As generative AI evolves, the preference for open or closed source may shift, though both could remain relevant. When privacy and security are especially important, organizations often opt for cheaper open-source models that can be deployed on local infrastructure, although their off-the-shelf performance is generally lower.

DeepSeek: An Open-Source LLM with Competitive Performance and Cost Efficiency

Developed by DeepSeek AI, the DeepSeek models are known for their impressive performance and cost efficiency. DeepSeek LLM 67B Base surpasses Llama 2 70B Base in reasoning, coding, mathematics, and Chinese comprehension.

DeepSeek LLM 67B Chat excels particularly in coding (HumanEval Pass@1: 73.78) and mathematics (GSM8K 0-shot: 84.1, MATH 0-shot: 32.6). It also achieved a score of 65 on the Hungarian National High School Exam.

DeepSeek-V3 has 671 billion total parameters and uses a Mixture-of-Experts (MoE) architecture that activates only a fraction of them (37 billion, according to the technical report) for each token, which lowers compute and hardware costs. Innovations such as Multi-head Latent Attention (MLA) and multi-token prediction boost its capabilities, allowing it to outperform other open-source LLMs and compete with top proprietary models.

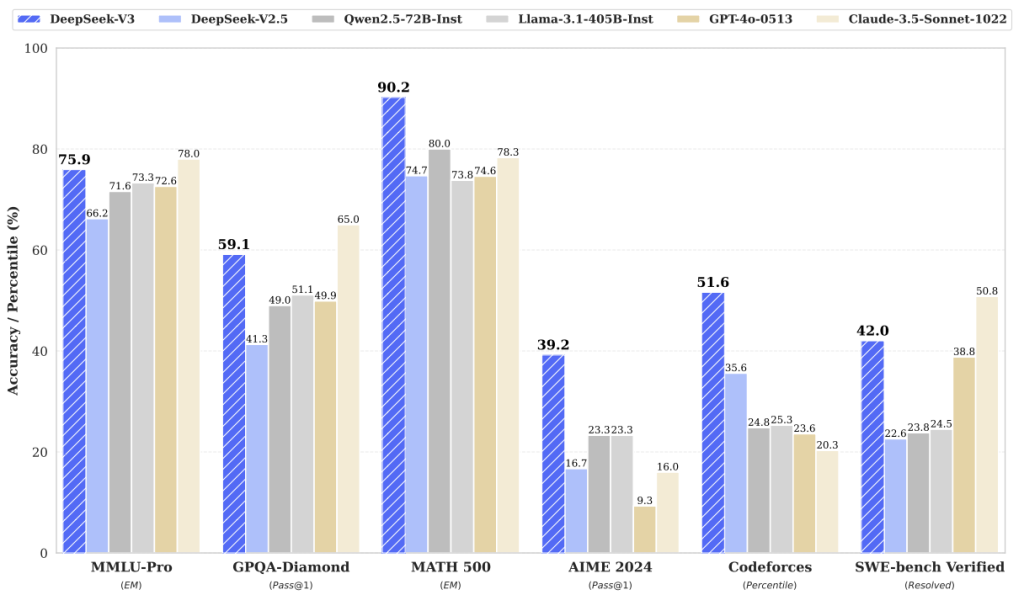

Benchmark performance comparison of DeepSeek-V3 and its counterparts

Source: DeepSeek-V3 Technical Report
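To make the Mixture-of-Experts idea mentioned above more concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not DeepSeek-V3's actual routing scheme; the expert functions, gate scores, and helper names (`route_top_k`, `moe_forward`, `make_expert`) are all hypothetical and chosen only to show that a token's output is computed from a small subset of experts while the others are never evaluated.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so that the selected experts sum to 1.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Weighted sum of the selected experts' outputs; unselected experts are skipped."""
    return sum(weight * experts[idx](x) for idx, weight in route_top_k(gate_scores, k))

# Toy setup (assumption): eight scalar "experts" that record when they are called.
calls = []

def make_expert(scale):
    def expert(x):
        calls.append(scale)
        return scale * x
    return expert

experts = [make_expert(s) for s in range(1, 9)]
gate_scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]  # hypothetical router output

selected = route_top_k(gate_scores, k=2)
output = moe_forward(1.0, experts, gate_scores, k=2)
active_fraction = len(selected) / len(experts)

print("selected experts:", [i for i, _ in selected])
print("experts actually evaluated:", calls)
print("active fraction:", active_fraction)
```

In this toy setup only two of the eight experts run for the input, which is the sense in which an MoE model can hold many parameters while spending compute on only a fraction of them per token.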

DeepSeek-V3: Price Competitiveness

DeepSeek-V3 is remarkably cost-effective: it was trained for roughly $6 million on 2,048 GPUs over about two months. Training required only 2.8 million GPU-hours, in stark contrast to Llama 3 405B’s 30.8 million GPU-hours. This challenges the belief that high LLM performance necessitates massive investment and extensive training time.
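As a rough sanity check on the training figures above, the short sketch below derives three quantities from them: the GPU-hour ratio between the two models, the wall-clock duration implied by 2,048 GPUs, and the implied cost per GPU-hour. The inputs are the rounded numbers quoted in this article; the assumption that every GPU ran continuously for the whole run is a simplification, and the variable names are illustrative.

```python
# Rounded figures quoted in the article.
GPU_HOURS_DEEPSEEK_V3 = 2.8e6
GPU_HOURS_LLAMA3_405B = 30.8e6
NUM_GPUS = 2048
TRAINING_COST_USD = 6e6

# How many times more GPU-hours Llama 3 405B used.
gpu_hour_ratio = GPU_HOURS_LLAMA3_405B / GPU_HOURS_DEEPSEEK_V3

# Wall-clock days, assuming all GPUs run in parallel without interruption.
wall_clock_days = GPU_HOURS_DEEPSEEK_V3 / NUM_GPUS / 24

# Implied rental-style price per GPU-hour.
cost_per_gpu_hour = TRAINING_COST_USD / GPU_HOURS_DEEPSEEK_V3

print(f"GPU-hour ratio: {gpu_hour_ratio:.1f}x")
print(f"Implied wall-clock time: {wall_clock_days:.0f} days")
print(f"Implied cost per GPU-hour: ${cost_per_gpu_hour:.2f}")
```

Under these assumptions the numbers are mutually consistent: the run works out to about 57 days, which matches "two months", at a little over $2 per GPU-hour, with Llama 3 405B using about 11 times as many GPU-hours.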

DeepSeek AI has implemented a competitive API pricing structure for DeepSeek-V3.

| Token type | DeepSeek-V3 price (USD) | GPT-4 price (USD) |
| --- | --- | --- |
| Input (cache hit) | $0.014 / 1M tokens | $30 / 1M tokens |
| Input (cache miss) | $0.14 / 1M tokens | $30 / 1M tokens |
| Output | $0.28 / 1M tokens | $60 / 1M tokens |

This pricing structure, combined with the model’s efficiency, makes DeepSeek-V3 a highly attractive option for developers and businesses seeking powerful AI capabilities without the exorbitant costs associated with other leading LLMs.

To illustrate the cost difference, let’s compare DeepSeek-V3 with GPT-4o and GPT-4. GPT-4o is priced at $10 per million tokens for output and $3 per million tokens for input. At these rates, DeepSeek-V3 is approximately 36 times cheaper for output ($10 vs. $0.28) and 214 times cheaper for cache-hit input ($3 vs. $0.014). Compared with the standard GPT-4 rates of $60 for output and $30 for input, DeepSeek-V3 is about 214 times cheaper for output and 2,143 times cheaper for cache-hit input.
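The ratios quoted above come from dividing per-million-token prices. The sketch below reproduces them and also estimates the cost of a sample workload. The prices are the ones stated in this article (using the cache-hit rate for DeepSeek-V3 input); the `PRICES` table, the helper names, and the sample workload of 10 million input and 2 million output tokens are illustrative assumptions, not part of any vendor API.

```python
# USD per 1M tokens, as quoted in the article (DeepSeek-V3 input = cache-hit rate).
PRICES = {
    "DeepSeek-V3": {"input": 0.014, "output": 0.28},
    "GPT-4o": {"input": 3.00, "output": 10.00},
    "GPT-4": {"input": 30.00, "output": 60.00},
}

def price_ratio(other, kind, baseline="DeepSeek-V3"):
    """How many times more expensive `other` is than `baseline` for `kind` tokens."""
    return PRICES[other][kind] / PRICES[baseline][kind]

def workload_cost(model, input_tokens, output_tokens):
    """Total USD cost for a workload, given raw token counts."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000

for model in ("GPT-4o", "GPT-4"):
    for kind in ("output", "input"):
        print(f"{model} vs DeepSeek-V3, {kind}: {price_ratio(model, kind):.0f}x")

# Hypothetical workload: 10M input tokens and 2M output tokens.
for model in PRICES:
    print(f"{model}: ${workload_cost(model, 10_000_000, 2_000_000):.2f}")
```

The printed ratios round to 36 and 214 for GPT-4o and to 214 and 2143 for GPT-4, matching the figures in the text. Real bills depend on the actual cache-hit rate and on current vendor pricing, so the workload totals are only indicative.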

DeepSeek’s competitive performance and cost-effectiveness have the potential to disrupt the current LLM landscape, which is dominated by closed-source models. Its open-source nature, combined with its impressive capabilities, could encourage wider adoption of open-source LLMs and foster greater innovation in the field.

DeepSeek-V3: Significance and Implications

DeepSeek-V3 represents a significant development in the AI landscape. Its open-source nature, combined with its cost-effectiveness and high performance, has several implications for the future of AI:

- Democratization of AI (accessibility and inclusivity): DeepSeek-V3 makes powerful AI accessible to a broader audience, including researchers, developers, and businesses without the resources for costly closed-source models. By offering open access to the model and its weights, it fosters collaboration, experimentation, and innovation, potentially accelerating AI research and development.

- Cost-Effective AI Solutions (affordability and value): The lower training costs and competitive pricing of DeepSeek-V3 can significantly reduce the financial barriers to AI adoption, enabling smaller organizations and individuals to leverage AI capabilities.

- Shift in AI Economics (disruptor): DeepSeek-V3 challenges the existing paradigm where cutting-edge AI models are primarily controlled by a few tech giants. Its emergence signals a potential shift towards more open and competitive AI development. This shift could lead to increased competition, reduced entry barriers for new players, and a redistribution of power within the AI industry.

Ethical and Geopolitical Considerations

The tension between open-source and closed-source AI extends beyond technical and economic considerations, encompassing ethical and geopolitical dimensions.

- Ethical Implications: Open-source proponents argue that transparency is essential for identifying and addressing biases in AI systems, promoting fairness, accountability, and responsible development. Closed-source advocates counter that their models offer better security and control over potentially harmful applications, mitigating the risks of misuse or malicious exploitation.

- Geopolitical Considerations: The development of AI technologies is increasingly viewed as crucial for national competitiveness and security. Some argue that open-source AI could help nations keep pace with global leaders in AI development, potentially influencing the global balance of power. This raises questions about the role of governments in regulating and promoting different AI approaches and the potential implications for international cooperation and competition in the AI field.

Legal Considerations

Navigating the legal landscape of open-source LLMs is crucial for developers and businesses. Key considerations include:

- Licensing: Understanding the terms and conditions of different open-source licenses is essential for compliance and to avoid potential legal issues.

- Data Privacy: Ensuring that the use of LLMs complies with data privacy regulations is crucial, especially when handling sensitive information.

- Copyright: Addressing copyright concerns related to the training data and the generated output of LLMs is important to avoid infringement.

Comparison of Open-Source and Closed-Source AI Models: Benefits and Drawbacks

The table below provides a side-by-side comparison of the benefits and drawbacks of open-source and closed-source AI models. It highlights key aspects such as cost-effectiveness, collaboration, transparency, customization, security, support, quality control, scalability, potential for misuse, and intellectual property concerns.

| Aspect | Open-Source AI Models | Closed-Source AI Models |
| --- | --- | --- |
| Cost-effectiveness | Free to use, eliminating licensing fees and reducing financial barriers. | Licensing fees and ongoing costs can be substantial. |
| Collaboration and Innovation | Fosters a collaborative environment, accelerating innovation and development cycles. | Restricted access limits community involvement and diversity of perspectives. |
| Transparency and Trust | Greater transparency, enhancing trust and accountability. | Limited visibility into algorithms and decision-making processes. |
| Customization and Flexibility | Allows for customization to suit specific needs and use cases. | Customization options may be limited, requiring vendor reliance for specific needs. |
| Community and Ecosystem | Benefits from a large, active community contributing to development and support. | Dedicated support from developers, ensuring a smoother experience and timely resolution. |
| Security Risks | Publicly available code can be exploited, leading to security vulnerabilities. | Restricted access to the codebase and controlled environments improves security. |
| Maintenance and Support | Reliance on community support, which may not be as reliable as professional support. | Regular updates, security patches, and dedicated technical support from developers. |
| Quality Control | Fragmentation and inconsistencies in quality due to different versions and modifications. | Strict protocols, rigorous testing, and adherence to guidelines ensure high quality. |
| Training and Deployment | Requires significant infrastructure and resources for training and deployment. | Designed with scalability in mind, backed by robust infrastructure. |
| Misuse and Abuse | Potential for misuse, such as generating harmful content or spreading misinformation. It’s hard to control their distribution and usage. | Similar risks, but typically more guardrails and safeguards in place to mitigate misuse. |
| Intellectual Property Concerns | Challenges in protecting intellectual property rights. | Clear legal agreements and licensing provide legal protection and compliance. |

The Open vs. Closed-Source Debate in AI Development

The ongoing tension between open-source and closed-source approaches will shape the AI landscape. DeepSeek-V3’s competitive performance and lower costs challenge commercial providers to justify their prices, potentially making advanced AI more affordable for many. Open-source models enable developers worldwide to iterate and improve rapidly, accelerating innovation beyond what individual organizations can achieve. With 66% of developers using open-source AI models, as reported by SlashData, these models are gaining traction. However, concerns regarding data privacy, intellectual property restrictions, and ethical implications need to be carefully considered and addressed.

The ongoing consolidation in the AI industry, as seen in Google’s acquisition of Alter and Nvidia’s purchase of Run:ai, adds complexity to the landscape. As major players vie for control, this could spur increased investment in both open-source and closed-source AI development. Investment in open-source AI is already growing, with private companies in the sector securing $14.9 billion in venture funding since 2020. However, consolidation also raises concerns about stifling innovation and concentrating power. Powerful open-source alternatives act as a counterbalance, fueling a tension between centralization and democratization that will shape the industry’s future.

Ultimately, the future of AI likely lies in a balance between open and closed-source approaches. Choosing between open and closed-source AI models is not straightforward. Organizations and developers need to understand each approach, weigh their pros and cons, and decide based on their needs and priorities. By combining the strengths of both, we can create a more innovative, inclusive, and responsible AI landscape that benefits society as a whole.

References

DeepSeek-V3 Technical Report, https://arxiv.org/abs/2412.19437

Andrej Karpathy Praises DeepSeek V3’s Frontier LLM, https://www.analyticsvidhya.com/blog/2024/12/deepseek-v3/

DeepSeek Models & Pricing, https://api-docs.deepseek.com/quick_start/pricing

OpenAI GPT4 API Pricing, https://www.nebuly.com/blog/openai-gpt-4-api-pricing

66% of developers use open-source AI models, and Industrial IoT leads network API adoption, https://www.slashdata.co/post/66-of-developers-use-open-source-ai-models-and-industrial-iot-leads-network-api-adoption

The Foundation Model Divide, https://www.cbinsights.com/research/report/future-of-foundation-models-open-source-closed-source/