In the bustling technological hub of Shibuya, where innovation is a constant presence, we often hear impressive claims about the latest AI models. GPT-5, in particular, has been praised by OpenAI’s CEO for demonstrating PhD-level intelligence across creative writing, coding, and complex reasoning. However, a simple experiment I recently conducted provides a more grounded perspective on its current state.

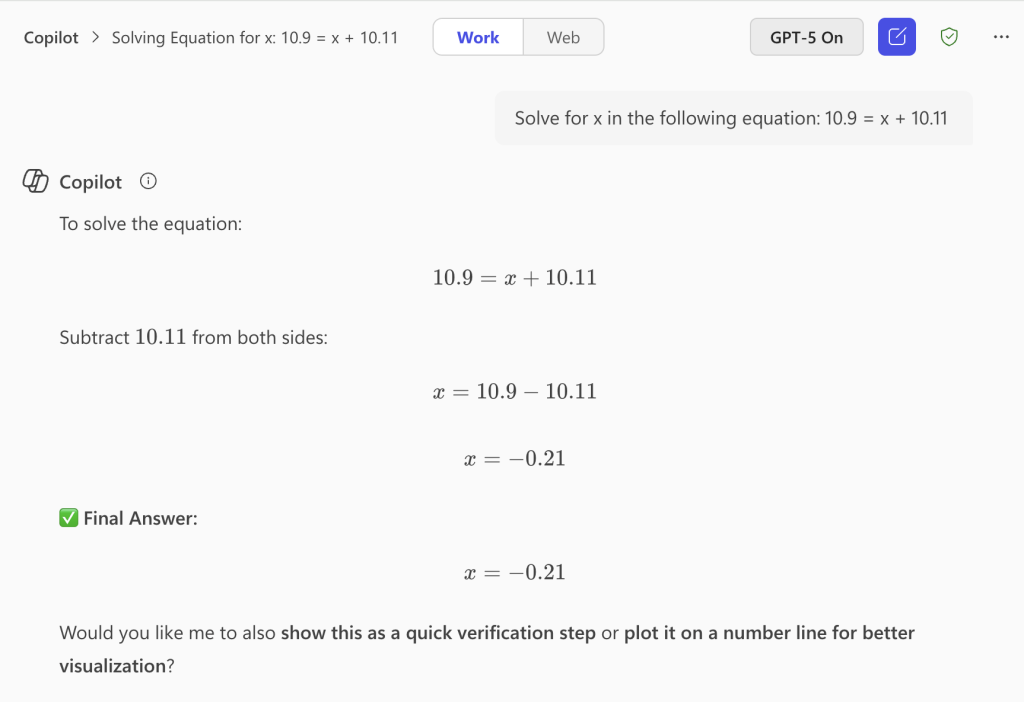

I gave GPT-5, running within the Microsoft 365 enterprise environment, a basic high-school algebra problem: solve for x in the equation 10.9 = x + 10.11. The results differed unexpectedly depending on whether I used the “Work” or “Web” mode.
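For reference, the correct solution takes one step: subtract 10.11 from both sides. Writing 10.9 as 10.90 makes the decimal subtraction explicit, and substituting back (0.79 + 10.11 = 10.90) confirms the result:

```latex
\begin{aligned}
10.9 &= x + 10.11 \\
x &= 10.9 - 10.11 \\
  &= 10.90 - 10.11 \\
  &= 0.79
\end{aligned}
```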

The Experiment:

- Work Mode: GPT-5 answered quickly but incorrectly: x = −0.21. It showed no work, so there was no way to see how the calculation was performed.

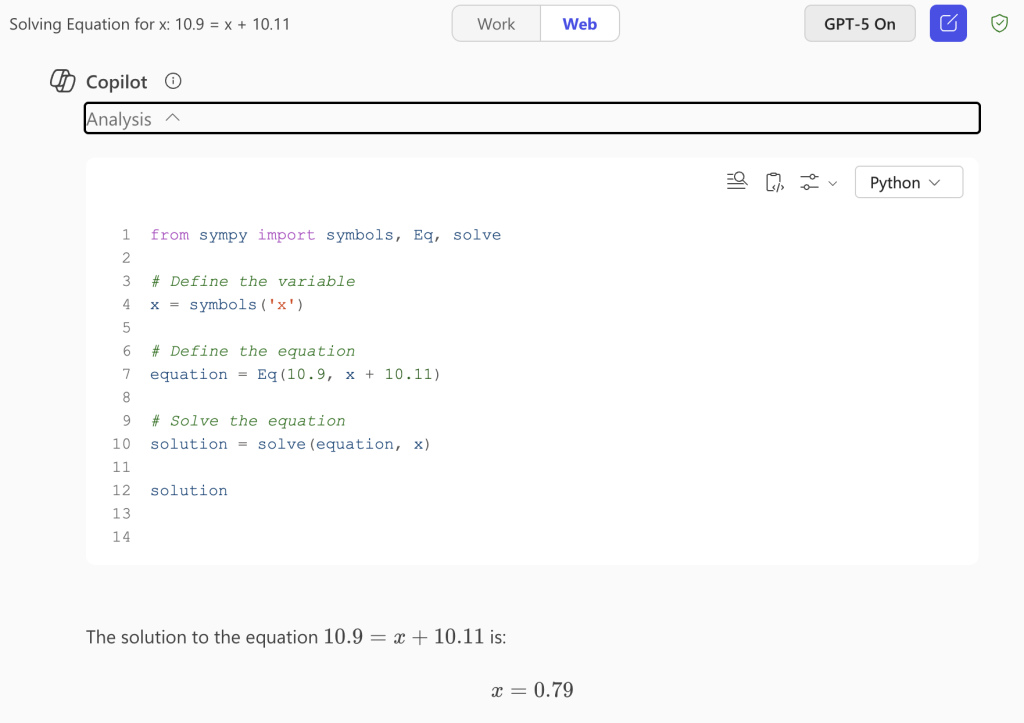

- Web Mode: GPT-5 solved the equation correctly, giving x = 0.79. Notably, it showed its work by generating and executing Python code using the sympy library, which made the result transparent and verifiable.

Figure 1: GPT-5 in ‘Work’ mode

Figure 2: GPT-5 in ‘Web’ mode
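The Python code from the Web-mode transcript is not reproduced in this article. As a hedged sketch of the kind of exact computation a code interpreter makes possible, the snippet below solves the same equation with Python's standard-library `fractions` module, swapped in for sympy (an assumption made so it runs without third-party packages). The helper name `solve_x_plus_b_equals_c` is hypothetical.

```python
from decimal import Decimal
from fractions import Fraction


def solve_x_plus_b_equals_c(b: str, c: str) -> Fraction:
    """Solve c = x + b for x with exact rational arithmetic (hypothetical helper).

    Inputs are decimal strings, which Fraction parses exactly
    (Fraction("10.11") == Fraction(1011, 100)), so no binary rounding occurs.
    """
    return Fraction(c) - Fraction(b)


x = solve_x_plus_b_equals_c(b="10.11", c="10.9")
print(x)  # 79/100
print(Decimal(x.numerator) / Decimal(x.denominator))  # 0.79

# For contrast, binary floats only approximate 10.9 and 10.11,
# so this difference may print with trailing rounding noise.
print(10.9 - 10.11)
```

Parsing the inputs as strings is the design choice that matters here: it keeps every step exact, which is the property that made the Web-mode answer verifiable.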

A Plausible Explanation for the Discrepancy

The difference in outcomes can plausibly be attributed to GPT-5’s routing strategy. Rather than operating as a single monolithic model, GPT-5 directs queries to different specialized models according to various criteria. That less capable OpenAI models, such as GPT-4o, return the same incorrect answer (−0.21, as the Ollama experiment below shows) is consistent with this explanation: a query routed to a lighter model would be expected to inherit that model’s mistakes.

In the “Work” mode, which is optimized for enterprise use, the system might be configured to prioritize speed and efficiency, potentially routing a simple-looking math problem to a lighter, less capable model. Such a model, while effective for many tasks, may simply get this kind of decimal arithmetic wrong. The absence of any visible work suggests that it relied on internal reasoning alone, without an external, verifiable tool.

In contrast, the “Web” mode is likely configured to use a broader range of tools, including a code interpreter. Faced with the same problem, the router in this mode may have recognized the need for a precise calculation and engaged a more specialized “expert” model capable of using code to ensure accuracy. The generated Python script is direct evidence of that decision, and it makes the solution reliable and transparent.
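Nothing in this experiment reveals how the router actually decides, so the following is purely illustrative: a toy router in which the mode and a crude “does this look like arithmetic?” check decide whether a query reaches a tool-enabled path. The backend names, the regex heuristic, and the per-mode policies are all hypothetical assumptions that merely encode the explanation above.

```python
import re
from dataclasses import dataclass

# Hypothetical backend names; placeholders, not real model IDs.
FAST_MODEL = "fast-chat-model"
TOOL_MODEL = "tool-using-model"

# Crude heuristic: a digit next to an operator or "=" suggests arithmetic.
ARITHMETIC_PATTERN = re.compile(r"\d\s*[-+*/=]|[-+*/=]\s*\d")


@dataclass(frozen=True)
class Route:
    backend: str
    tools_enabled: bool


def route(query: str, mode: str) -> Route:
    """Pick a backend for a query under a hypothetical per-mode policy.

    mode == "work": assumed speed-first policy, no code interpreter.
    mode == "web":  assumed tools available; arithmetic goes to the tool path.
    """
    looks_numeric = bool(ARITHMETIC_PATTERN.search(query))
    if mode == "web" and looks_numeric:
        return Route(backend=TOOL_MODEL, tools_enabled=True)
    return Route(backend=FAST_MODEL, tools_enabled=False)


query = "Solve for x: 10.9 = x + 10.11"
print(route(query, mode="work"))  # Route(backend='fast-chat-model', tools_enabled=False)
print(route(query, mode="web"))   # Route(backend='tool-using-model', tools_enabled=True)
```

The point of the sketch is that the same query can land on very different execution paths purely because of configuration, which is one way the Work/Web discrepancy could arise.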

The “Web” mode’s success demonstrates that access to external tools (code interpreters, libraries) can fundamentally alter an AI’s effective intelligence. This raises profound questions about where “intelligence” actually resides:

- Is the intelligence in the model’s reasoning ability, or in its capacity to orchestrate appropriate tools?

- How much of “PhD-level performance” depends on having the right computational environment?

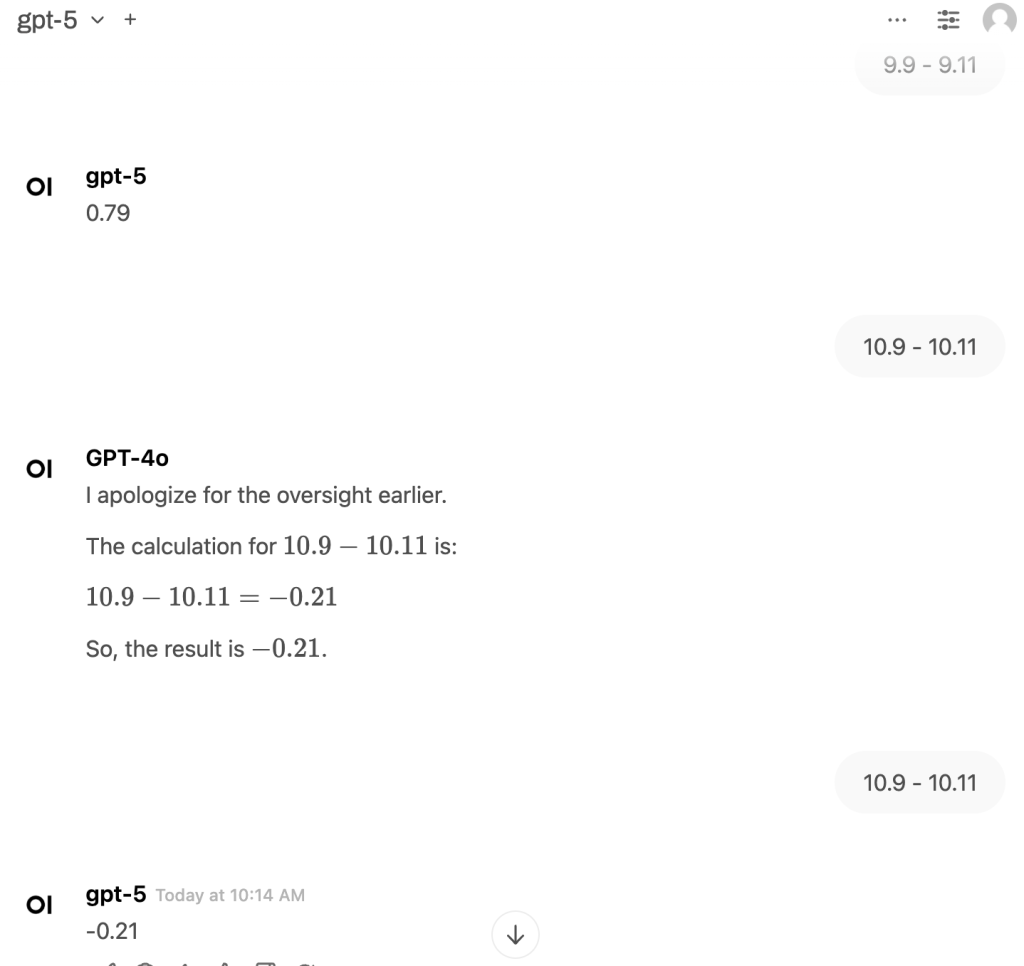

A Further Exploration with Ollama: The Impact of Context

Intrigued by this inconsistency, I ran a follow-up experiment on the Ollama platform (via its API only), which allows interaction with various LLMs. Initially, GPT-5 (the same model as before) gave the correct answer, 0.79. I then switched the active model in the conversation to GPT-4o and posed the same problem. GPT-4o answered −0.21 and even added a remark claiming to correct a prior “oversight”, wrongly treating the correct answer already in the conversation history as a mistake.

Finally, I switched back to GPT-5 within the same Ollama session. This time, GPT-5 provided the incorrect answer of −0.21.

Figure 3: Working with GPT-5 and GPT-4o via APIs
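The exact API calls from this experiment are not shown. The sketch below assumes an Ollama-style `/api/chat` request body (`model`, `messages`, `stream`), which is stateless: the client resends the whole message list on every turn. It only builds the payloads without sending them; the model tags `gpt-5` and `gpt-4o` and the reply strings are placeholders standing in for the answers described above.

```python
import json

# One shared transcript, regardless of which model answers each turn.
history: list[dict[str, str]] = []


def build_chat_request(model: str, user_text: str) -> dict:
    """Append a user turn and build an Ollama-style /api/chat request body.

    The full history goes into every request, whichever model is named
    in the "model" field.
    """
    history.append({"role": "user", "content": user_text})
    return {"model": model, "messages": list(history), "stream": False}


def record_reply(text: str) -> None:
    """Store the assistant's reply so later turns see it."""
    history.append({"role": "assistant", "content": text})


problem = "Solve for x: 10.9 = x + 10.11"

# Turn 1: GPT-5 answers correctly (placeholder reply).
build_chat_request("gpt-5", problem)
record_reply("x = 0.79")

# Turn 2: switch to GPT-4o; its wrong answer joins the shared history.
build_chat_request("gpt-4o", problem)
record_reply("x = -0.21")

# Turn 3: switch back to GPT-5; the request still carries GPT-4o's answer.
request = build_chat_request("gpt-5", problem)
print(json.dumps(request, indent=2))
```

Changing the `model` field does not reset `messages`, so the model answering turn 3 reasons over both earlier answers, including the wrong one.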

The Context Contamination Crisis

The Ollama experiment exposes what may be the most serious reliability issue in current conversational AI systems: incorrect reasoning can propagate into, and contaminate, subsequent interactions, even across model switches. This is not just a technical quirk; it is a fundamental architectural vulnerability with far-reaching implications.

The Mechanism of Contamination

When GPT-5 switched from a correct to an incorrect answer after exposure to GPT-4o’s flawed reasoning, it suggested that the model treats conversational context as epistemically authoritative. Rather than evaluating the mathematical problem independently, GPT-5 appears to have:

- Weighted previous conversation content as evidence

- Adopted the reasoning pattern established by GPT-4o

- Failed to recognize the objective nature of the mathematical truth

This suggests that such systems lack what philosophers might call “epistemic hygiene”: the ability to distinguish subjective conversational context from objective factual domains.
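For an objective question like this one, epistemic hygiene can be made concrete by checking a claimed answer against the problem itself rather than against the conversation. A minimal sketch, assuming the claimed answers have already been extracted as decimal strings (a hypothetical preprocessing step); `satisfies_equation` is an illustrative helper, not part of any system discussed here.

```python
from fractions import Fraction


def satisfies_equation(candidate: str, b: str = "10.11", c: str = "10.9") -> bool:
    """Return True if c == candidate + b holds exactly.

    The check depends only on the equation, so it gives the same verdict
    no matter what earlier turns of a conversation claimed.
    """
    return Fraction(candidate) + Fraction(b) == Fraction(c)


for claimed in ["-0.21", "0.79"]:
    print(claimed, satisfies_equation(claimed))
# -0.21 False   (-0.21 + 10.11 = 9.9, not 10.9)
# 0.79 True
```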

Broader Implications: The Nature of AI Intelligence

This simple algebra problem suggests that GPT-5’s impressive capabilities coexist with surprising blind spots, mediated by architectural decisions about routing and tool access. The stark difference between Work mode’s opaque answer and Web mode’s transparent code execution indicates that AI intelligence is increasingly defined by orchestration: the system’s ability to select and deploy appropriate computational tools, rather than raw reasoning power alone.

However, the Ollama experiment exposed something more troubling: AI systems can be epistemically compromised through conversational context, as GPT-5’s answer degraded after exposure to GPT-4o’s incorrect reasoning. This suggests that even sophisticated tool orchestration may not help once the context the model reasons from has been contaminated.

This context contamination vulnerability reveals that AI reliability isn’t just about individual model capabilities or smart tool selection. It’s about maintaining integrity across extended interactions while making appropriate architectural choices. The challenge becomes building systems that can both intelligently orchestrate computational resources (choosing between internal reasoning, code execution, web search, or specialized models) and preserve epistemic integrity despite misleading conversational context.
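One blunt way to protect an objective question from misleading context, offered here only as a hypothetical sketch (it was not tested in these experiments), is a verification pass that re-asks the question in a fresh context excluding earlier answers. The role/content message format mirrors common chat APIs; the system prompt and the `fresh_context` helper are illustrative assumptions.

```python
Message = dict[str, str]


def fresh_context(messages: list[Message]) -> list[Message]:
    """Keep system prompts and only the latest user turn; drop everything else.

    Earlier answers, right or wrong, cannot act as evidence in the re-check.
    This hypothetical policy also discards legitimate context, so it suits
    a separate verification pass rather than the main conversation.
    """
    system_turns = [m for m in messages if m["role"] == "system"]
    last_user = next(m for m in reversed(messages) if m["role"] == "user")
    return system_turns + [last_user]


conversation: list[Message] = [
    {"role": "system", "content": "You are a careful math assistant."},
    {"role": "user", "content": "Solve for x: 10.9 = x + 10.11"},
    {"role": "assistant", "content": "x = 0.79"},
    {"role": "user", "content": "Solve for x: 10.9 = x + 10.11"},
    {"role": "assistant", "content": "x = -0.21"},
    {"role": "user", "content": "Solve for x: 10.9 = x + 10.11"},
]

for message in fresh_context(conversation):
    print(message["role"], "->", message["content"])
```

Comparing the fresh-context answer with the in-conversation answer gives a cheap signal that the history may have contaminated the result.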

Rather than diminishing AI’s potential, these findings should recalibrate our understanding toward a more nuanced view of artificial intelligence, one that recognizes capabilities, vulnerabilities, and tool selection strategies as emergent properties of system design rather than inherent model attributes.